Technology Considerations

This section describes considerations for usage and adaptation of the Sample Solution.

Deployment Options

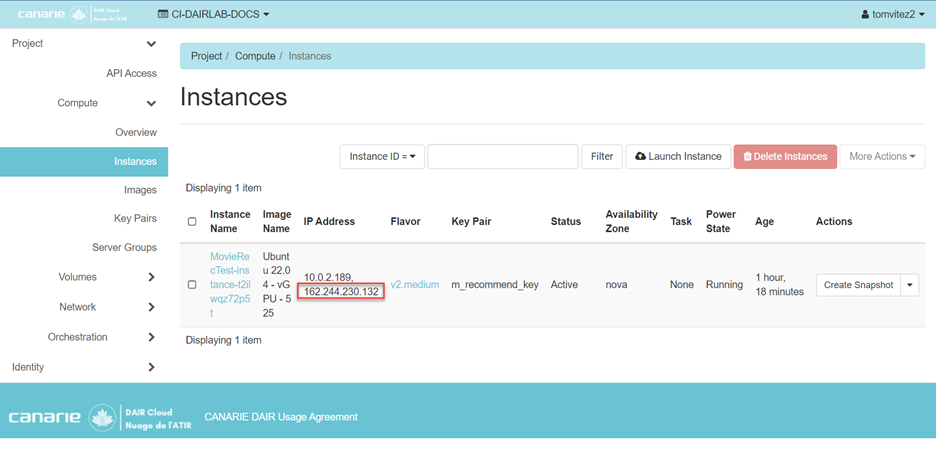

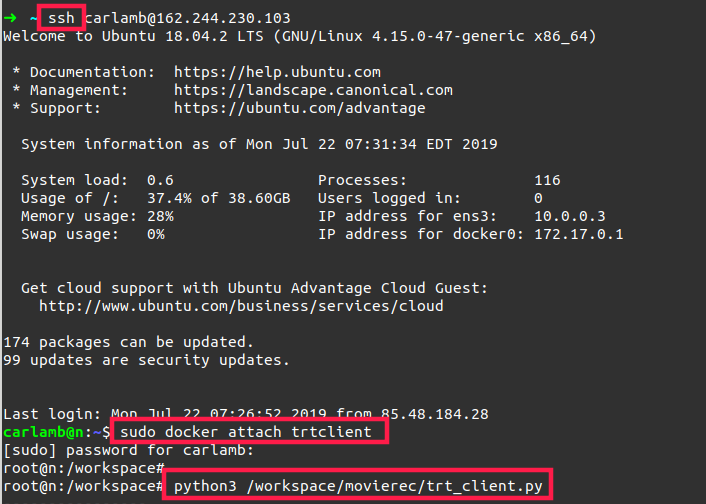

The Sample Solution is deployed to a TensorRT inference server, but the TensorFlow model could also be run directly in production without exporting it and running a TensorRT inference server. However, TensorRT is generally more efficient on GPUs, and its predictions are generally faster than those of the alternatives.

Moreover, for simplicity, both the client and the inference server run on the same host in this example. Typically, they would run on different hosts, with the appropriate ports exposed between them.

Technology Alternatives

An alternative to TensorFlow for building and training the model is PyTorch, another popular Python framework for machine learning that is also compatible with the TensorRT inference server. There are many comparisons online regarding when to choose one or the other, including Awni Hannun: “PyTorch or TensorFlow?”, “TensorFlow or PyTorch: The Force is Strong with Which One?”, “PyTorch vs. TensorFlow — Spotting the Difference”, and “The Battle: TensorFlow vs Pytorch”.

Data Architecture Considerations

The Sample Solution relies on a dataset that, in this case, is implemented as a single text file. There are several considerations regarding the data, the pieces of code that rely on it, how to extend it, and best practices.

The two main components that directly use the dataset are the data pipeline, which loads it into memory for training, and the client, which maps predictions to actual movie names. Those are the pieces that would need updates if the data architecture changed. For example, if the data were stored in a database, the data pipeline would need either to dump the database to a file before running the current pipeline or to query the database system directly from the code. Similarly, the client could load the data into a hash map from a file or from a database, or it could query a database on every request.
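As a minimal sketch of the first client option (loading the data into a hash map from a file), the following assumes a hypothetical pipe-delimited layout in which each line starts with a movie ID followed by its title. The file layout, the delimiter, and the names `load_movie_titles` and `titles_for` are assumptions for illustration, not the Sample Solution's actual code.

```python
import io
from typing import Dict, Iterable, List, TextIO


def load_movie_titles(lines: TextIO, delimiter: str = "|") -> Dict[int, str]:
    """Build an in-memory map from movie ID to title.

    Assumes (hypothetically) one movie per line: "<id><delimiter><title>...".
    """
    titles: Dict[int, str] = {}
    for line in lines:
        fields = line.strip().split(delimiter)
        if len(fields) < 2:
            continue  # skip blank or malformed lines
        titles[int(fields[0])] = fields[1]
    return titles


def titles_for(predicted_ids: Iterable[int], titles: Dict[int, str]) -> List[str]:
    """Translate predicted movie IDs to names; unknown IDs get a placeholder."""
    return [
        titles.get(movie_id, f"<unknown movie {movie_id}>")
        for movie_id in predicted_ids
    ]


# Usage with an in-memory file; a real client would open the dataset file instead.
sample = io.StringIO("1|Toy Story (1995)\n2|GoldenEye (1995)\n")
movie_titles = load_movie_titles(sample)
print(titles_for([2, 1, 99], movie_titles))
```

Loading the map once at start-up keeps each lookup in memory. Querying a database on every request trades that speed for data that is always current.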

To extend the data and incorporate new users or movies into the solution, the model must be retrained. A production solution would retrain the model periodically and would provide personalized recommendations only after users have used the system enough for it to collect sufficient data. Typically, similar projects offer generalized recommendations of popular items before providing personalized ones.
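The fallback from personalized to popular recommendations can be sketched as follows. This is an illustrative sketch only: the threshold `MIN_RATINGS`, the function names, and the `personalized` callable (standing in for a call to the trained model) are hypothetical and not part of the Sample Solution.

```python
from collections import Counter
from typing import Callable, List, Sequence, Tuple

MIN_RATINGS = 5  # hypothetical threshold; tune per application


def most_popular(ratings: Sequence[Tuple[int, int]], k: int) -> List[int]:
    """Return the k most frequently rated item IDs from (user_id, item_id) pairs."""
    counts = Counter(item_id for _, item_id in ratings)
    return [item_id for item_id, _ in counts.most_common(k)]


def recommend(
    user_id: int,
    ratings: Sequence[Tuple[int, int]],
    personalized: Callable[[int, int], List[int]],
    k: int = 3,
) -> List[int]:
    """Use the model only for users with enough history; otherwise fall back."""
    n_user_ratings = sum(1 for rated_by, _ in ratings if rated_by == user_id)
    if n_user_ratings < MIN_RATINGS:
        return most_popular(ratings, k)
    return personalized(user_id, k)


# Usage: user 1 has five ratings, user 2 has two, user 3 has one.
ratings = [(1, 10), (1, 20), (1, 30), (1, 40), (1, 50), (2, 10), (2, 20), (3, 10)]


def fake_model(user_id: int, k: int) -> List[int]:
    """Stand-in for the trained recommender; returns dummy item IDs."""
    return [100 + i for i in range(k)]


print(recommend(1, ratings, fake_model, k=2))  # enough history: model output
print(recommend(2, ratings, fake_model, k=2))  # too little history: popular items
```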

Finally, since the data may contain users’ information, developers implementing a similar solution should follow standard practices for critical data management. For instance, the training component only needs identifiers, not the specific user or item information. At prediction time, however, the client needs to identify a user (for example, by login), translate that to an ID, and do the same for movies. Therefore, similar solutions should apply standard industry best practices in the components that collect and store data from users, export data for model training, and query data to translate IDs to plain text.
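One way to keep user and item details out of the training export is to translate them to integer IDs first. The sketch below assumes a hypothetical `IdRegistry` class and an `export_for_training` helper. In a real deployment the registry would live in a protected data store rather than in memory.

```python
from typing import Dict, Iterable, List, Tuple


class IdRegistry:
    """Assigns a stable integer ID to each distinct key (login, movie title, ...)."""

    def __init__(self) -> None:
        self._ids: Dict[str, int] = {}

    def id_for(self, key: str) -> int:
        # A new key receives the next free integer; a known key keeps its ID.
        return self._ids.setdefault(key, len(self._ids))


def export_for_training(
    events: Iterable[Tuple[str, str, float]],
    users: IdRegistry,
    movies: IdRegistry,
) -> List[Tuple[int, int, float]]:
    """Replace logins and titles with IDs so the training data holds no plain text."""
    return [
        (users.id_for(login), movies.id_for(title), rating)
        for login, title, rating in events
    ]


# Usage with made-up events of the form (login, movie title, rating).
users, movies = IdRegistry(), IdRegistry()
events = [
    ("alice@example.com", "Toy Story", 5.0),
    ("bob@example.com", "Toy Story", 3.0),
    ("alice@example.com", "GoldenEye", 4.0),
]
training_rows = export_for_training(events, users, movies)
print(training_rows)
```

The same registries can serve the client at prediction time, translating a login to its ID before querying the model.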

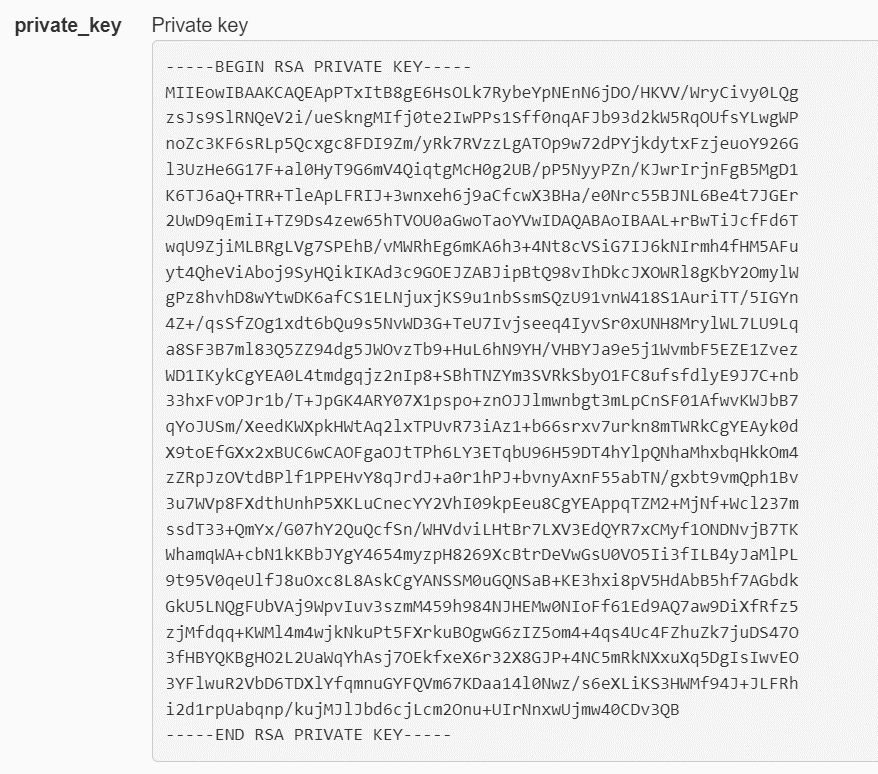

Security Considerations

Once deployed, there is a minor risk that bad actors could gain access to the Sample Solution environment and modify it to mount a cyber attack (for example, a DoS attack). To mitigate this risk, the deployment scripts follow DAIR security ‘Best Practices’, such as:

- firewall rules that restrict access to all ports except SSH port 22 on the deployed instance.

- access authorization controls that allow only authenticated DAIR participants to deploy and access an instance of the Sample Solution.

In addition, to limit security risks, please follow the recommendations below:

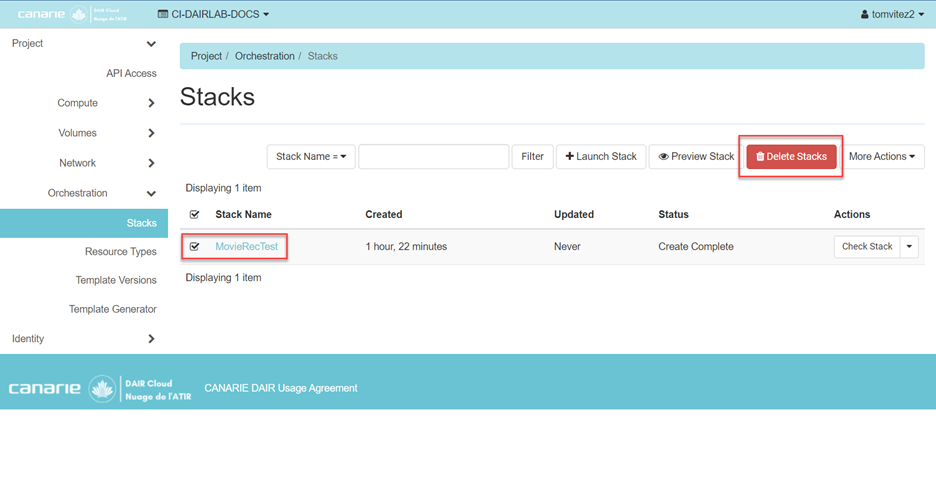

- use security controls ‘as deployed’ without modification

- when finished using the reference solution, terminate the app (see the section about termination earlier in this document).

Finally, as a stand-alone application, the reference solution does not directly consume network or storage resources while running. As such, those resources do not need any explicit control procedures.

Networking Considerations

The inference server can be queried by clients using the HTTP or gRPC (a remote procedure call framework originally developed by Google) protocols. There are no specific networking considerations to highlight.

Scaling Considerations

This Sample Solution uses a stateless model. That means the same model can be deployed to many inference servers, implementing a standard, highly scalable architecture in which many requests are sent in parallel and a load balancer spreads them across the inference servers.
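Because the model is stateless, any server can answer any request, so even a simple round-robin policy is enough to spread the load. The following is a minimal sketch of that policy. The `RoundRobinBalancer` class and the host names are hypothetical, and a production deployment would normally rely on an existing load balancer rather than custom code.

```python
import itertools
from typing import Iterator, List


class RoundRobinBalancer:
    """Hands out inference servers in turn, wrapping around at the end."""

    def __init__(self, servers: List[str]) -> None:
        if not servers:
            raise ValueError("at least one server is required")
        self._cycle: Iterator[str] = itertools.cycle(servers)

    def next_server(self) -> str:
        """Return the address that should receive the next request."""
        return next(self._cycle)


# Usage: five requests spread over three (made-up) inference servers.
balancer = RoundRobinBalancer(
    ["inference-1:8000", "inference-2:8000", "inference-3:8000"]
)
assignments = [balancer.next_server() for _ in range(5)]
print(assignments)
```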

Availability Considerations

The TensorRT inference server provides a health check API that indicates whether the server is able to respond to inference requests. That allows the inference server to be included like any regular host in a highly available architecture, where a load balancer can use the health check to stop forwarding requests to an unhealthy host, replace the host, or start a new one.
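The way a load balancer might use such a health check can be sketched as follows. The exact health endpoint depends on the inference server version, so the URLs below are placeholders, and `http_probe` and `healthy_servers` are hypothetical helper names rather than part of any server API.

```python
import urllib.request
from typing import Callable, List


def http_probe(health_url: str, timeout: float = 2.0) -> bool:
    """Return True if a GET on health_url answers with HTTP 200."""
    try:
        with urllib.request.urlopen(health_url, timeout=timeout) as response:
            return response.status == 200
    except OSError:
        # Covers connection errors, timeouts, and HTTP error responses.
        return False


def healthy_servers(
    health_urls: List[str],
    probe: Callable[[str], bool] = http_probe,
) -> List[str]:
    """Keep only the servers whose health check currently succeeds."""
    return [url for url in health_urls if probe(url)]


# Usage with a fake probe, so the example runs without a live server.
# The "/health" paths are placeholders, not the server's real endpoint.
down = {"http://inference-2:8000/health"}
available = healthy_servers(
    ["http://inference-1:8000/health", "http://inference-2:8000/health"],
    probe=lambda url: url not in down,
)
print(available)
```

Requests would then be forwarded only to the hosts in `available`, while the hosts that fail the check are replaced or restarted.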

User Interface (UI) Considerations

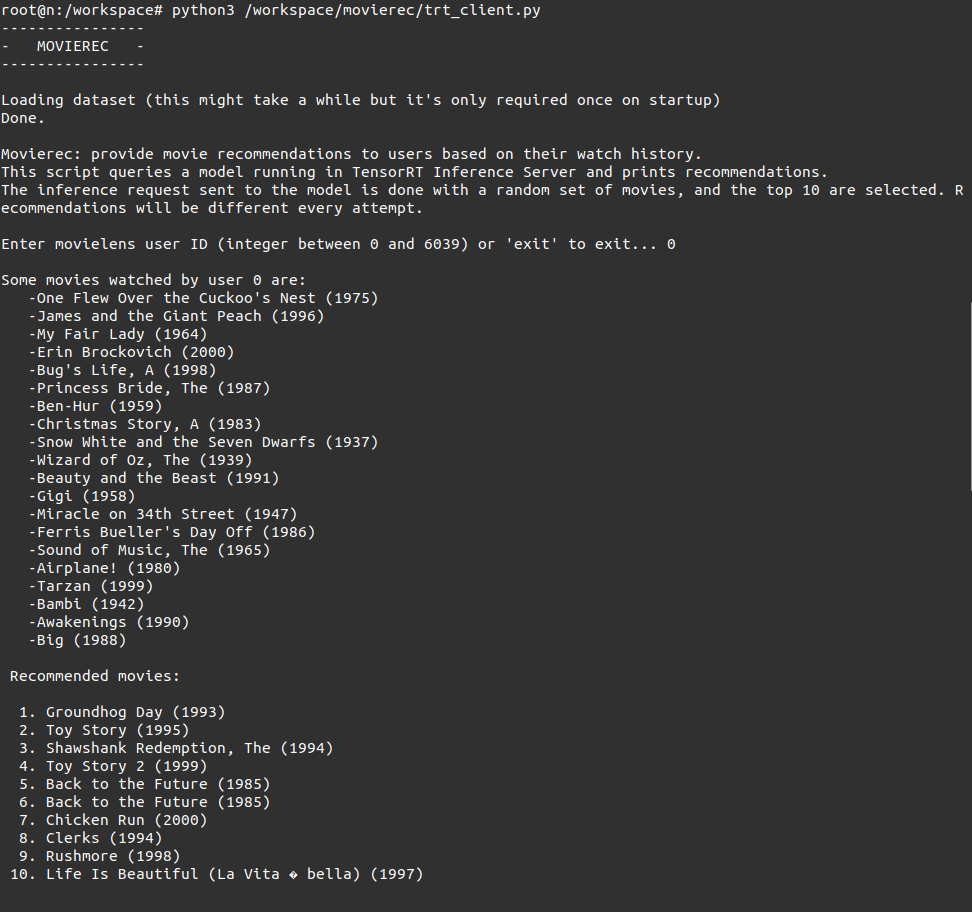

The Sample Solution provides only a simple command-line interface meant to showcase the backend. The UI would depend on the specific application and is outside the scope of this example.

API Considerations

The code is regular Python, organized in a modular manner, and includes extensive comments. Developers can therefore extend it to create custom solutions.

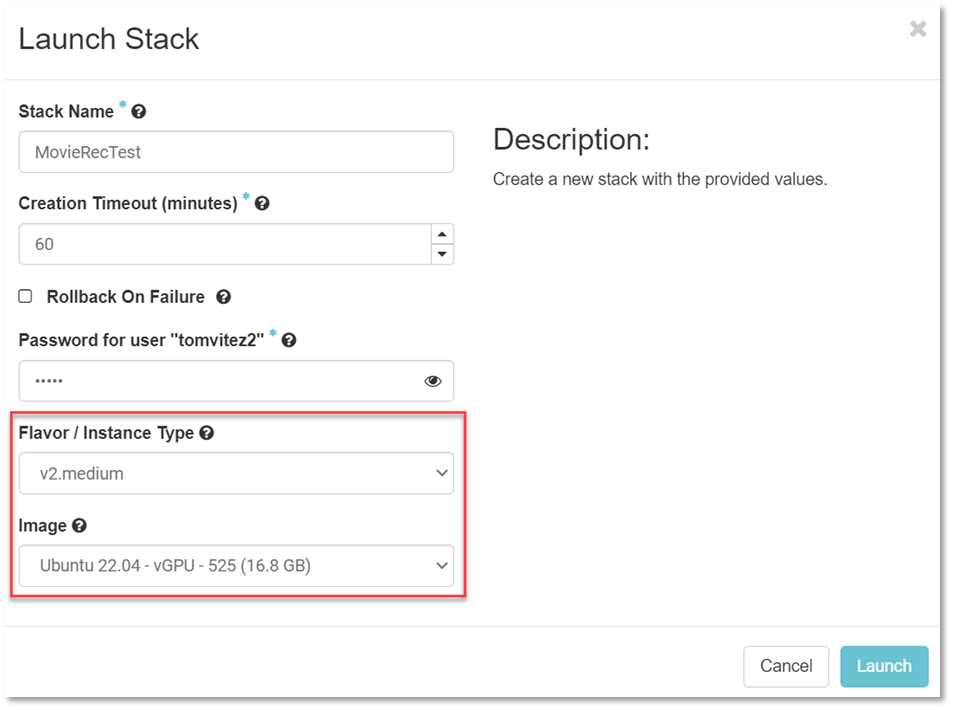

Cost Considerations

This solution requires a single GPU instance in DAIR, whose equivalent value is approximately $100 / month in a public cloud.

License Considerations

All the libraries used in this Sample Solution are open source, as is the Movie Recommender code itself. The MovieLens dataset is available for non-commercial use under certain conditions. See detailed licensing information below. If you plan to use, modify, extend, or distribute any components of this Sample Solution or its libraries, you must consider conformance to the terms of the licenses:

Source Code

The source code for the solution is available to DAIR participants at: https://code.cloud.canarie.ca:3000/carlamb/MovieRecommender. Please refer to the README.md file for instructions on how to clone and use the repository.

Glossary

| Term | Description |
| --- | --- |
| API | Application Programming Interface. |
| Collaborative Filtering | Collaborative Filtering is a technique used by Recommender Systems to make automatic predictions about the interests of a user by collecting preferences or ratings from many users (collaborating). |
| CUDA | Compute Unified Device Architecture. It is a parallel computing platform and programming model from NVIDIA. |
| DAIR | Digital Accelerator for Innovation and Research. This document refers to the DAIR Pilot released in Fall 2019. |
| Deep Learning | Deep learning is part of a broader family of machine learning methods based on artificial neural networks. |
| GPU | Graphics processing unit. A hardware component with high performance for parallel processing. |
| Recommender System | A recommender system or a recommendation system is a Machine Learning model that seeks to predict the “rating” or “preference” a user would give to an item. They provide suggestions of relevant items to users. |
| UI | User interface. |