Encryption, isolation, authentication, and monitoring are the cornerstones of secure architecture in the cloud or in a data centre. Organizations starting a cloud deployment project face several challenges, and security is one of the largest. Whether your organization is moving to the cloud or starting anew, managing and mitigating risk is no small task.

Our goal for this tutorial is to give you a solid foundation for achieving a good security posture in a modern cloud environment. We’ll address the key topics (cloud architecture, authentication, networking, and logging) and how applications work with these components, so that you and your team can ask the right questions when designing your project’s cloud infrastructure. Later in the tutorial, we will cover some common security models that you can apply.

To illustrate these concepts, we will use a fictional company named “Pretendco”. They are a young startup in the data science space with just 5 employees, looking to create and share a machine learning model with clients, who can upload an image to the service and find out whether or not the image contains a cat.

Where to Start

The security of a project is a vital element and should be considered as early as possible during the planning stages. The best way to approach this process is to examine which aspects of your project must be secured. You should keep security in mind when making decisions regarding:

- your architecture,

- the data collected and/or used, and

- any element of your project that could be intentionally or unintentionally compromised.

By confirming the assumptions made while creating your project, you can note where sufficient controls need to be in place to mitigate risk.

For Pretendco, we will assume the minimum security requirement includes securing the cloud infrastructure that hosts their training data and models. It also includes securing their external or public-facing elements, that is, how clients can send their data safely and securely to Pretendco for analysis.

Sensitivity and Risk Analysis

We recommend using three main categories (Sensitivity, Threat, and Economic Impact) when looking at your services, the data they transmit and store, and the general lifecycle of your data. Understanding the importance of each component will help guide you in determining the tradeoffs necessary to keep your data, your project, your company, and your users safe.

The exact steps and process for doing this analysis are outside the scope of this document, but we recommend resources such as this article from Intel, or this article from the Information Systems Audit and Control Association (ISACA), for a more in-depth approach to risk analysis.

First Components to Analyze

Several common areas of a deployment are important to analyze when determining how best to manage security. Later in this tutorial, we will look deeper into these 6 categories and cover some of the different approaches that can be combined to mitigate your risk.

These 6 categories represent key segments of a deployment:

- Authentication and Authorization – How are you ensuring that the right people are accessing the right data both internally and externally?

- Data Protection – How are you ensuring data is protected?

- Network Access – How openly available is this service and its components? Should they be?

- Software Security – How are you ensuring that security updates are applied and known vulnerabilities mitigated or patched?

- Configuration Management – How are you ensuring that your configuration is as you expect it to be, or how easy is it to update if an error is found?

- Logging and Visibility – How are you monitoring for unexpected operations?

Another key consideration is that the most common flaws and exploits are not the ones that make Hollywood blockbusters. Based on yearly security breach and incident reporting, the most common attack patterns include items like man-in-the-middle attacks, denial of service, privilege misuse (exposed or weak credentials), software bugs, cross-site scripting, and ransomware.

Each type of attack requires a different mitigation approach, but there are commonalities and ways to preempt and mitigate these classes of attacks. These classes of attacks persist because it is easy to make a mistake in a complex system, and a cloud deployment is no different from an on-premises or other deployment in this way. Taking time to plan and understand the risks will pay dividends, as the reputation of the company and remediation costs are on the line. Few businesses can afford hours or days of downtime to fix a security problem, so early and comprehensive planning is critical.

For the purpose of this tutorial, we can make some assumptions about Pretendco’s risk analysis. Pretendco wants to ensure that both their internal data and the data provided by clients are kept safe. The degree of sensitivity of their data and program does not warrant additional measures such as air gapping or running disconnected from the internet. Considering their requirements for access and exposure, along with minimal data collection, Pretendco’s adherence to standard best practices should be sufficient.

What am I responsible for?

Before we go deeper into the 6 categories listed above, it’s important to explore where a cloud deployment does or does not differ from a traditional physical deployment in a co-location facility or on-premises.

With cloud computing, there is a fundamental difference in terms of physical security. Unlike a co-location facility, where you host your own equipment and are responsible for its physical security, the cloud provider owns and secures the hardware. Depending on whether you are leveraging Infrastructure as a Service (IaaS) such as Amazon EC2, or Software as a Service (SaaS) such as Amazon Redshift, some components are no longer in your control. This tradeoff means you don’t incur the direct costs, but it also means you depend on an outside party to protect your infrastructure from physical threats such as fire and to ensure that unauthorized third parties do not gain access to it.

It is key to note that while the running of a service may be outsourced, using the service correctly or ensuring it is properly secured is still something you have control over. For example, while Amazon Web Services may guarantee the physical security of your infrastructure, it is still up to you to ensure your API keys are securely stored and used as expected (in a controlled manner).

Now that we have confirmed what our responsibilities are and what elements we are able to control, we can focus on answering the question – how do we architect security in our deployment or application?

Authentication and Authorization

Authentication refers to proving that an entity is who or what it claims to be, while authorization is the permission system that confirms whether that entity is allowed to perform the action it wishes to perform. Ensuring that only authenticated and authorized users can access your services is a key step in risk mitigation.

Without correct authentication and authorization in front of the services used within, by, or even running alongside your application, it’s easy for a single mistake to expose data or functionality.

As such, we recommend the following best practices:

- Don’t use simple passwords, don’t reuse passwords, and avoid using passwords in place of keys or certificates to permit authentication.

- Use Multi-Factor Authentication wherever possible for human logins.

- Leverage the Principle of Least Privilege – an account that is only able to do the bare minimum is a lot less likely to successfully execute commands that are unexpected.

- Avoid sharing accounts for users or services.

- Regularly review permissions and authorized users, and revoke unnecessary privileges.

- Deny by default: anything not explicitly allowed is denied. This is frequently encountered with cloud providers, whose firewall rules initially block traffic and force you to manually permit access from specific sources.
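The deny-by-default and least-privilege ideas above can be sketched in a few lines of application code. This is a minimal Python illustration, not a recommendation for any particular framework; the account names and action strings (`web-frontend`, `model:predict`, and so on) are hypothetical.

```python
from typing import Dict, FrozenSet

# Hypothetical per-account grants: each account (human or service) gets only
# the actions it needs, and no account is shared between users or services.
GRANTS: Dict[str, FrozenSet[str]] = {
    "web-frontend": frozenset({"model:predict"}),
    "training-job": frozenset({"training-data:read", "model:write"}),
    "alice-admin": frozenset({"model:predict", "model:write", "model:deploy"}),
}


def is_allowed(account: str, action: str) -> bool:
    """Deny by default: only actions explicitly granted to `account` pass."""
    # An unknown account gets an empty grant set rather than a fallback "allow".
    return action in GRANTS.get(account, frozenset())


def require(account: str, action: str) -> None:
    """Raise instead of silently continuing when an action is not granted."""
    if not is_allowed(account, action):
        raise PermissionError(f"{account!r} is not allowed to perform {action!r}")
```

If the web frontend were compromised, `require("web-frontend", "training-data:read")` would raise `PermissionError`, so the attacker would inherit only the ability to request predictions.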

In our Pretendco scenario, this means our imaginary friends:

- Ensure that their instances can only be accessed via SSH keys, so passwords can’t be guessed.

- Set up certain accounts (such as admin) on their web application with two-factor authentication. This could be done with third-party vendors such as Authy or Auth0, or by using existing Time-based One-Time Password (TOTP) libraries.

- Ensure that passwords are not reused between services, so that a password leaked in an unrelated breach cannot be used against them. HaveIBeenPwned.com is a fantastic resource for checking whether credentials have appeared in known breaches, which attackers draw on for credential-stuffing attacks.

- Use randomly generated passwords, stored in a password manager such as 1Password or a secrets manager such as HashiCorp Vault, to help stymie dictionary-based attacks on services.
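Vendors and existing libraries handle two-factor authentication for you, and in production a maintained library is the better choice. To show roughly what a TOTP library does under the hood, here is a minimal standard-library Python sketch of TOTP (RFC 6238) built on HOTP (RFC 4226). The 30-second step, six-digit codes, and one-step drift window are common defaults assumed here, not details of Pretendco’s setup.

```python
import base64
import hashlib
import hmac
import secrets
import struct
import time
from typing import Optional


def new_secret() -> str:
    """Random 160-bit shared secret, base32-encoded as authenticator apps expect."""
    return base64.b32encode(secrets.token_bytes(20)).decode("ascii")


def hotp(key: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    mac = hmac.new(key, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation: the low 4 bits of the last byte select a 4-byte window.
    offset = mac[-1] & 0x0F
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(key: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over the current time step."""
    now = time.time() if at is None else at
    return hotp(key, int(now // step), digits)


def verify_totp(key: bytes, submitted: str, at: Optional[float] = None,
                window: int = 1, step: int = 30) -> bool:
    """Accept codes from adjacent time steps to tolerate small clock drift."""
    now = time.time() if at is None else at
    return any(
        # compare_digest avoids leaking how many characters matched via timing.
        hmac.compare_digest(totp(key, now + drift * step, step).encode(), submitted.encode())
        for drift in range(-window, window + 1)
    )
```

The secret from `new_secret()` would be shown to the user once (typically as a QR code) and stored server-side; at login, `verify_totp(base64.b32decode(secret), code)` checks the submitted code. A real verifier should also refuse to accept the same code twice once it has been used successfully.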

In the case of Pretendco, there are several additional approaches they could consider, though these might be overkill for such a straightforward service. Approaches like the Zero Trust Model or Role-Based Access Control (RBAC) are widely used by large enterprises to scale authentication and authorization across an organization. Each of these approaches offers a slightly different way of limiting the power a bad actor can wield should they gain access via bad permissions, leaked passwords, or a lack of password protection.

Data Protection

Storing sensitive data, such as Personally Identifiable Information (PII) and credit card numbers, comes with legal liability. Encryption is a key component of keeping this data safe, both when data is ‘in transit’ to clients or other services (e.g., via SSL/TLS) and when it is ‘at rest’ or stored (e.g., disk encryption).

Data that is ‘at rest’ outside your live environment, such as backups or assets stored in services like S3 and SharePoint, also needs to be secured. Many cloud providers offer encrypted volume and object storage services, and automating their use makes securing sensitive data easier. Data on an encrypted volume cannot be recovered once the volume is deleted or the encryption key is lost, which also lowers the risk of accidental exposure.

Beyond encryption, abstracting PII in live databases helps separate sensitive data. “Tokenization” replaces the PII itself with unique tokens. This makes it possible to split private data from non-private data and keep it in a separate security enclave. When needed, the token is used to look up the PII in that separate, secured service.
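As a rough illustration of tokenization, the sketch below swaps a PII value for a random token and keeps the mapping in a separate object. It is a minimal Python sketch under stated assumptions: the `TokenVault` class and the `tok_` prefix are hypothetical, and the in-memory dictionaries stand in for what would be a separate, access-controlled service and datastore.

```python
import secrets
from typing import Dict


class TokenVault:
    """Minimal in-memory sketch of a tokenization service (hypothetical).

    In a real deployment the mapping would live in a separate, locked-down
    service; plain dictionaries are used here only for illustration.
    """

    def __init__(self) -> None:
        self._token_to_value: Dict[str, str] = {}
        self._value_to_token: Dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        # Reuse the existing token so the same PII always maps to one token.
        if value in self._value_to_token:
            return self._value_to_token[value]
        token = "tok_" + secrets.token_urlsafe(16)  # random, reveals nothing about value
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str) -> str:
        # Raises KeyError for unknown tokens instead of guessing.
        return self._token_to_value[token]


vault = TokenVault()
# The application database stores only the token; the email stays in the vault.
order = {"order_id": 42, "email": vault.tokenize("cat.lover@example.com")}
```

Returning the same token for a repeated value keeps lookups and joins working, at the cost of revealing which records share a value; a stricter design would issue a fresh token each time.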

Most cloud providers offer the ability to encrypt your disk storage. When transmitting data between services, ensure that transfers are not done “in the clear” but via HTTPS or another encrypted protocol, to reduce the chance of data being stolen in transit.

In Pretendco’s circumstances this has meant:

- Ensuring that their training data is stored on an encrypted volume,

- Connections to their database are done via SSL/TLS, and

- Clients connect to their web interface via HTTPS to prevent the snooping of traffic.
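For the database and HTTPS connections above, the important client-side settings are certificate verification, hostname checking, and a modern protocol floor. The Python sketch below shows those settings with the standard `ssl` module; the TLS 1.2 minimum is an assumed policy choice, and `open_tls_connection` is a hypothetical helper for a plain TLS service.

```python
import socket
import ssl
from typing import Optional


def make_client_tls_context(ca_file: Optional[str] = None) -> ssl.SSLContext:
    """TLS client settings: verify the server certificate and hostname, TLS 1.2+."""
    # create_default_context() already enables CERT_REQUIRED and check_hostname;
    # ca_file can point at a private CA, such as one used for an internal database.
    context = ssl.create_default_context(cafile=ca_file)
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


def open_tls_connection(host: str, port: int, context: ssl.SSLContext) -> ssl.SSLSocket:
    """Connect over TCP, then upgrade to TLS; raises ssl.SSLError on a bad certificate."""
    raw = socket.create_connection((host, port), timeout=10)
    try:
        return context.wrap_socket(raw, server_hostname=host)
    except Exception:
        raw.close()  # do not leak the plain TCP socket if the handshake fails
        raise
```

Database drivers usually negotiate TLS themselves rather than accepting a pre-wrapped socket; for PostgreSQL, for example, libpq’s `sslmode=verify-full` connection setting requests the same certificate and hostname checks.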

Network Access

In all cloud models, the physical network is managed by the provider. This yields many of the same benefits found when offloading responsibility for physical compute or storage hardware. In other words, hardware-level failure and exploits are fully managed by your provider. However, the virtual networks used by the instances may require your attention.

Network configuration is a critical part of securing your architecture. A properly secured network helps limit exposure and reduce risk. This can be accomplished by leveraging private networks or subnets as security enclaves where only specific traffic is allowed. Firewalls and security groups may be created to limit access via the Principle of Least Privilege – ensuring that only the right IPs are able to talk to different services.

This can be as simple as ensuring that the management ports (e.g., SSH) of your service are not wide open to the public internet, to limit drive-by password attacks, or creating a security enclave where only database servers can talk to each other.

Finally, network access should rely on encrypted communication protocols such as SSH, or RDP and VNC with SSL/TLS enabled. If an unencrypted protocol is required, tunnelling it through a VPN or other tool will help prevent third parties from snooping on your traffic.

With their web frontend and a single database backend, Pretendco can:

- Ensure that the database and its instance are only accessible from within their internal network, effectively hiding their database server from direct access from the outside world.

- Lock down all other ports on their web instance, leaving open only what clients need to access the service.
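Security groups and host firewalls evaluate rules along these lines. The Python sketch below models deny-by-default rule matching for Pretendco’s two instances; the subnets, the admin address (taken from a reserved documentation range), and the PostgreSQL port are assumptions for illustration, and this is not how any particular cloud provider implements its firewall.

```python
import ipaddress
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Rule:
    source: str  # CIDR block allowed to connect
    port: int    # destination TCP port


# Hypothetical rules: HTTPS open to everyone, SSH only from one admin address.
WEB_RULES = [Rule("0.0.0.0/0", 443), Rule("203.0.113.10/32", 22)]
# Hypothetical rule: the database only accepts connections from the web subnet.
DB_RULES = [Rule("10.0.1.0/24", 5432)]


def is_permitted(rules: Iterable[Rule], source_ip: str, port: int) -> bool:
    """Deny by default: traffic passes only if a rule matches both source and port."""
    addr = ipaddress.ip_address(source_ip)
    return any(
        rule.port == port and addr in ipaddress.ip_network(rule.source)
        for rule in rules
    )
```

An empty rule list permits nothing, which is the deny-by-default behaviour described earlier.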

Software Security

Keeping your application’s components up-to-date is a challenge, both in and out of the cloud. Depending on your level of responsibility, you may need to manage updates for the OS, shared libraries, application frameworks, and of course, your application code itself. Out-of-date software is a major security risk and one of the leading avenues for remote code execution exploits. Once a vulnerability in a popular framework or library is public, bots often begin scanning web properties and IP ranges for targets to exploit. This is why keeping software up to date is so important, especially in web applications.

Beyond updates, another important process is “hardening”. Default OS and service settings are not always suitable for a production environment. The hardening process updates the default configuration for services and underlying components (such as the OS and network stack), with a focus on security. The hardening process should be part of your development workflows.

A common way to verify that your settings are appropriate and secure is to perform a penetration test. Many open-source tools exist (such as Kali Linux or OpenVAS) that allow you to run these tests internally, but hiring an outside professional or agency is highly recommended. Many of the vulnerability scans available with these tools are automated, but interpreting and acting on the results is not. With a little work, you can gain meaningful insights from the reports, but it is hard to replace the expertise of a trained professional.

To stay up-to-date, Pretendco has the following items enabled on their infrastructure. Each of these methods is used to keep different pieces of the infrastructure updated or hardened.

- Automatic security updates on packages from their OS provider. (e.g., Ubuntu Security Updates or Windows Security Update)

- Dependabot monitoring via GitHub to alert them when dependencies of the web application are out of date or have a known vulnerability.

- As part of deployment, services that are not required to be running are turned off. By having fewer services running you are reducing the “attack surface area”, thereby increasing overall security.
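Dependabot and similar tools compare the versions you depend on against advisory data. The core comparison can be sketched as below; the package names and fixed-in versions are made up, and the naive dotted-number parser is an assumption (real tools follow each ecosystem’s versioning rules, such as PEP 440 or Semantic Versioning).

```python
from typing import Dict, List, Tuple


def parse_version(version: str) -> Tuple[int, ...]:
    """Naive parser for purely numeric versions such as '2.4.1'."""
    return tuple(int(part) for part in version.split("."))


# Hypothetical advisory data: package -> first version that contains the fix.
FIXED_IN = {"examplelib": "2.4.1", "imagetools": "9.0.0"}


def vulnerable(installed: Dict[str, str], fixed_in: Dict[str, str]) -> List[str]:
    """Return installed packages older than the version that fixes a known issue."""
    return sorted(
        name
        for name, version in installed.items()
        if name in fixed_in and parse_version(version) < parse_version(fixed_in[name])
    )
```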

Additionally, other applicable items from the Security Technical Implementation Guides are enabled, for example, ensuring that only SSH keys can be used to access the server, and turning on the firewall to prevent access to ports or services not meant to be publicly accessible.
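A hardening item like “SSH keys only” is easy to verify automatically. The sketch below audits the text of an OpenSSH `sshd_config` file for three common hardening settings. The choice of settings and required values is an assumption for illustration (STIGs and your distribution may specify more, or different, values), and missing keywords are reported because the sketch does not model sshd’s built-in defaults.

```python
import re
from typing import Dict, List

# Assumed hardening targets; keywords are lower-cased because sshd treats
# keywords case-insensitively.
REQUIRED: Dict[str, str] = {
    "passwordauthentication": "no",
    "pubkeyauthentication": "yes",
    "permitrootlogin": "no",
}


def parse_sshd_config(text: str) -> Dict[str, str]:
    """Collect global keyword/value pairs, keeping the first value for each keyword
    (assumed to mirror sshd's first-value-wins behaviour for most keywords)."""
    settings: Dict[str, str] = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue
        # Keyword and value may be separated by whitespace or by "=".
        parts = re.split(r"\s*=\s*|\s+", line, maxsplit=1)
        if len(parts) != 2:
            continue
        keyword, value = parts[0].lower(), parts[1].strip()
        if keyword == "match":
            break  # settings after a Match line are conditional; ignored here
        settings.setdefault(keyword, value)
    return settings


def audit(text: str) -> List[str]:
    """Return one finding per required setting that is missing or has another value."""
    settings = parse_sshd_config(text)
    return [
        f"{keyword}: expected {expected!r}, found {settings.get(keyword)!r}"
        for keyword, expected in REQUIRED.items()
        if settings.get(keyword) != expected
    ]
```

A configuration management run could call `audit()` on the deployed file and fail loudly on any finding.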

Configuration Management

Configuration management uses tools such as Ansible, Puppet, Chef, and others to ensure your infrastructure is in an expected, configured state. Some tools run automatically and continuously to ensure compliance, while other deployment tools ensure compliance whenever a deployment is prepared.

Using configuration management (automation scripts) as another preventive security measure makes human errors, such as misconfigurations or missed or skipped steps, much less likely. It also saves time when setting up your infrastructure and can apply sensible defaults before the infrastructure has the chance to interact with your application or your users.

Pretendco, in keeping with their smaller scope, have chosen to use their Continuous Integration and Delivery (CI/CD) system to run their Ansible playbooks against their infrastructure every 6 hours, and whenever the production branch is updated, to ensure that the configuration has not drifted. This also lets them perform less frequent tasks such as renewing their SSL certificate, installing packages that fall outside of automated security updates, or keeping file permissions consistent. While each of these could be done as a separate step, making them part of the configuration management process ensures that, whether it’s the first run or the hundredth, the steps are repeatable and produce a consistent outcome.
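Tools like Ansible compare the desired state with the actual state and change only what differs, which is what makes repeated runs safe. The Python sketch below applies that idea to the file-permission example on a POSIX system; the `enforce_modes` function and the example path and mode are hypothetical, and the `dry_run` flag only loosely mirrors the idea behind Ansible’s check mode.

```python
import os
import stat
from typing import Dict, List


def enforce_modes(desired: Dict[str, int], dry_run: bool = False) -> List[str]:
    """Compare file permissions against the desired state and fix any drift.

    Returns the paths that differed. With dry_run=True, drift is only reported.
    Running it twice in a row changes nothing the second time (idempotence).
    """
    drifted = []
    for path, mode in desired.items():
        current = stat.S_IMODE(os.stat(path).st_mode)
        if current != mode:
            drifted.append(path)
            if not dry_run:
                os.chmod(path, mode)
    return drifted


# Hypothetical desired state: the TLS private key is readable by its owner only.
DESIRED_MODES = {"/etc/ssl/private/pretendco.key": 0o600}
```

A scheduled job could run `enforce_modes(DESIRED_MODES, dry_run=True)` and alert on a non-empty result, or apply the fix directly on each run.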

By effectively using configuration management, Pretendco ensures compliance, but also ensures they are better equipped to rebuild their infrastructure quickly should anything unfortunate occur.

Logging and Visibility

Centralized logging is fundamental to securing modern infrastructure and understanding a variety of issues. These can include access issues or errors when using a specific feature of the service. We look to logs across all components of the application to provide hints as to the root of the problem (e.g., user permissions, database connection errors, etc.).

With a central logging platform, it is easier to track events across various systems and services. Centralization also matters because logs on a compromised system can be modified by bad actors, while copies already shipped to a separate log server are harder for them to alter.

For instance, web application server logs record what users were doing, while database server or service logs contain only information about database access or internal database errors. To understand a database error, it is important to know what the user was doing when it occurred, which requires correlating both sets of logs.

For this to work well, error codes, metrics, and log formats should be reasonably consistent and meaningful. Most cloud environments offer centralized logging services, but third-party options (e.g., Loggly, Elasticsearch, Splunk, etc.) may have additional features useful to your team.

Beyond logging, active environment monitoring provides insights into the health and behaviour of your systems. This is particularly useful for alerting you to and resolving any issues that might occur. Active environment monitoring allows event-driven, high-availability solutions where application health can trigger automated remediation, such as rebuilding a service or removing a node from an active cluster. You should also configure alerts to notify you of abnormal resource usage or authentication behaviour. A good rule of thumb is that anything that impacts availability or may need manual intervention should be an alert, even if automated remedy procedures are in place.
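As one example of an alert on abnormal authentication behaviour, the sketch below counts failed logins per source address in a sliding time window. It is a minimal Python illustration; the threshold of five failures in five minutes is an assumed starting point, and in practice this logic usually lives in your monitoring or log-analysis platform rather than in hand-written code.

```python
from collections import defaultdict, deque
from typing import Deque, Dict


class FailedLoginMonitor:
    """Alert when one source has too many failed logins within a time window.

    Threshold and window are assumed defaults; tune them to your own traffic.
    Timestamps are assumed to arrive in non-decreasing order per source.
    """

    def __init__(self, threshold: int = 5, window_seconds: float = 300.0) -> None:
        self.threshold = threshold
        self.window = window_seconds
        self._events: Dict[str, Deque[float]] = defaultdict(deque)

    def record_failure(self, source: str, timestamp: float) -> bool:
        """Record one failed login; return True while the source is over the threshold."""
        events = self._events[source]
        events.append(timestamp)
        # Drop failures that have aged out of the sliding window.
        while events and events[0] <= timestamp - self.window:
            events.popleft()
        return len(events) >= self.threshold
```

Once a source is over the threshold, every further failure returns `True`; a real alerting setup would typically deduplicate these into a single notification.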

For Pretendco, logs from each of their services are sent to a centralized rsyslog server, creating a single location to see all logs. This makes debugging and identifying security issues significantly easier. Should the need for log analysis become a bigger requirement, it will be much easier to use a tool such as Elasticsearch or Splunk with logs already centralized.
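Consistent, machine-readable log lines make centralized logs much easier to search and correlate. The sketch below uses Python’s standard `logging` module to emit one JSON object per event and, optionally, forward it to a syslog server such as Pretendco’s rsyslog host via `logging.handlers.SysLogHandler`. The field names, the `fields` convention for extra data, and the `logs.internal` host name are assumptions for illustration.

```python
import json
import logging
import logging.handlers
import socket
from typing import IO, Optional, Tuple


class JsonFormatter(logging.Formatter):
    """Render each record as a single JSON object with a consistent set of keys."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "time": self.formatTime(record, "%Y-%m-%dT%H:%M:%S"),
            "host": socket.gethostname(),
            "service": record.name,
            "level": record.levelname,
            "message": record.getMessage(),
        }
        # Structured context passed as logger.info(..., extra={"fields": {...}}).
        payload.update(getattr(record, "fields", {}))
        return json.dumps(payload)


def build_logger(service: str, syslog_address: Optional[Tuple[str, int]] = None,
                 stream: Optional[IO[str]] = None) -> logging.Logger:
    """Create a logger that writes JSON lines to syslog and/or a stream."""
    logger = logging.getLogger(service)
    logger.setLevel(logging.INFO)
    logger.propagate = False
    handlers: list = []
    if syslog_address is not None:
        # Sends over UDP by default, e.g. syslog_address=("logs.internal", 514).
        handlers.append(logging.handlers.SysLogHandler(address=syslog_address))
    if stream is not None:
        handlers.append(logging.StreamHandler(stream))
    for handler in handlers:
        handler.setFormatter(JsonFormatter())
        logger.addHandler(handler)
    return logger
```

With every service logging the same keys (for example an `error_code` and a `user_id`), a database error can be matched against the web request that triggered it.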

Architecture Tips

Security Aware Architecture

All security models involve layers of both connected and separated components. To ensure your architecture is security aware, it is important to consider the risk factors for your specific use case and confirm that your architecture includes the necessary checks and balances to keep your application, data, and users safe.

Each service or connection between your application’s underlying components presents an opportunity to explore and add a defensive layer to prevent unexpected use.

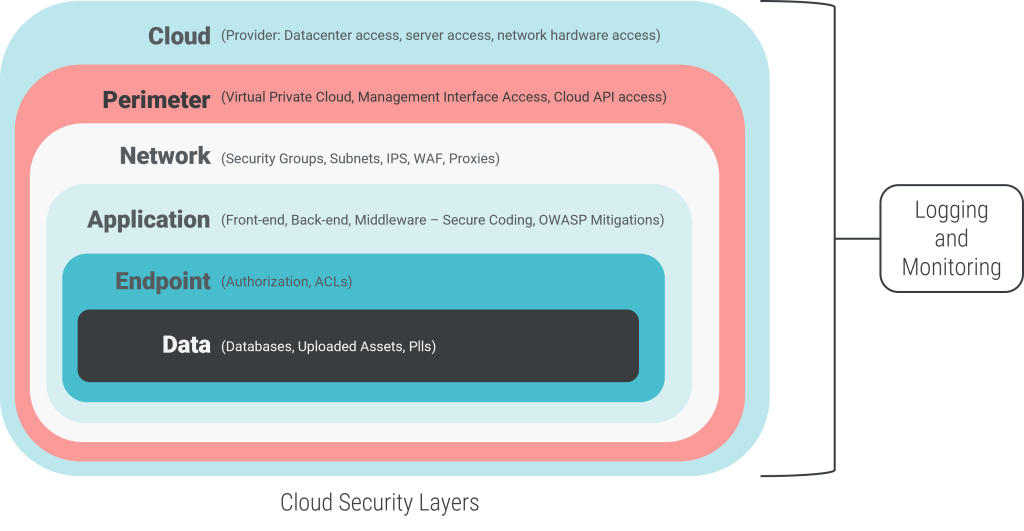

The diagram below visualizes the security layers you will typically encounter when deploying to the cloud.

You can use the diagram above to examine your responsibility and exposure points and adapt your architecture accordingly. As part of contingency planning, list the components of your application that fall within each layer. Then, depending on your responsibility, plan how to prevent a breach or recover from an outage.

As illustrated throughout this tutorial, Pretendco has attempted to ensure that checks are in place at different levels to prevent being exploited, from limiting network access, to ensuring encryption, to ensuring that reliable authentication and authorization are in place.

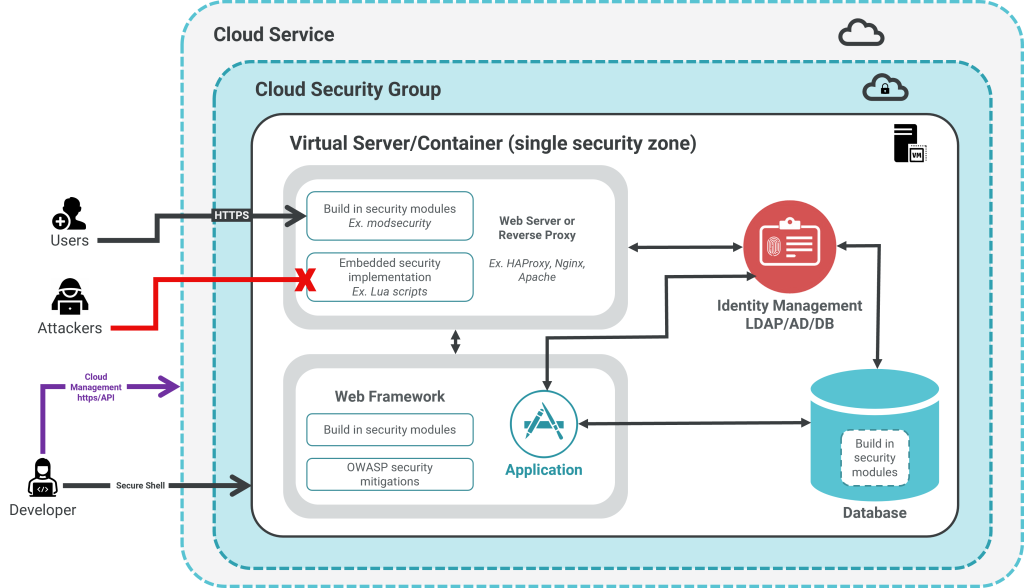

The diagram below outlines how each component can use the items above it to prevent the exploitation of one element from spreading to others, or to prevent a breach in the first place.

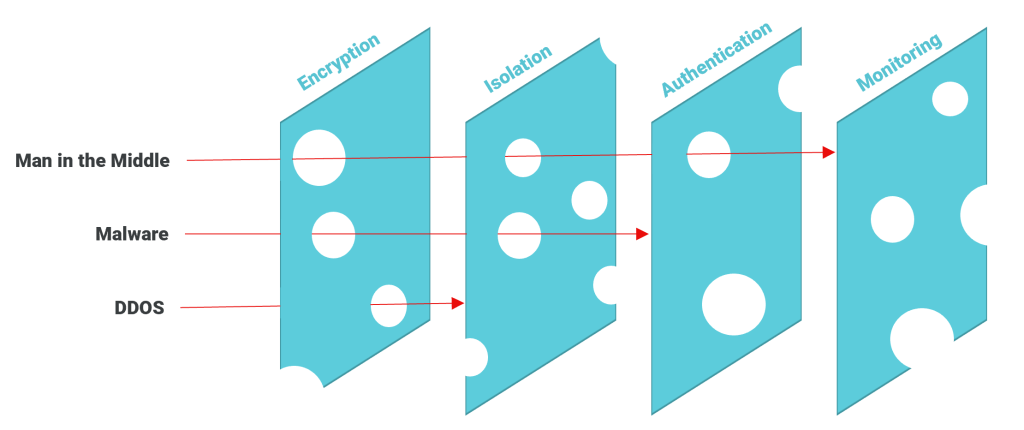

Swiss Cheese Model (Defense in Depth)

We would be remiss not to mention the paradigm that explains why different measures need to be taken together. The Swiss Cheese Model (also known as Defense in Depth) shows how multiple preventive defenses can be layered so that one defense’s strengths cover another’s weaknesses. No single step will completely protect your application, but together they significantly reduce the likelihood of an attack succeeding.

As you design or modify your architecture, identifying where and how gaps exist with different defense strategies will help you identify what other strategies to deploy to complement them.

For example, Pretendco might identify an error in their configuration management that resulted in a service being turned on without authentication. Because the firewall already allowed only expected ports from the public internet (not the port used by the new service), the exposure would have been mitigated before it became an issue.
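The Pretendco scenario above can be modelled as a request passing through independent layers, any one of which can stop it. The Python sketch below is purely illustrative: the layer functions, the open-port set, and the token value are hypothetical, and the authentication layer is deliberately built “misconfigured” to show the firewall still blocking the new service.

```python
import hmac
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class Request:
    source_ip: str
    port: int
    token: Optional[str] = None


# A layer returns None to let the request continue, or a reason to block it.
Layer = Callable[[Request], Optional[str]]

OPEN_PORTS = {443}          # hypothetical firewall allowlist
EXPECTED_TOKEN = "s3cr3t"   # hypothetical credential, for illustration only


def firewall(req: Request) -> Optional[str]:
    return None if req.port in OPEN_PORTS else f"port {req.port} blocked by firewall"


def make_auth_layer(enabled: bool) -> Layer:
    def auth(req: Request) -> Optional[str]:
        if not enabled:
            return None  # the configuration error: authentication switched off
        if req.token is not None and hmac.compare_digest(
                req.token.encode(), EXPECTED_TOKEN.encode()):
            return None
        return "authentication failed"
    return auth


def handle(req: Request, layers: List[Layer]) -> Tuple[bool, str]:
    """Every layer must pass; the first one that objects stops the request."""
    for layer in layers:
        reason = layer(req)
        if reason is not None:
            return False, reason
    return True, "allowed"
```

Dropping `firewall` from the misconfigured layer list lets the same request through, which is exactly the gap the second layer covers.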

Final Thoughts

Security tools and best practices are always changing, but fundamentals still hold true. Encryption, isolation, authentication, and monitoring are the cornerstones of secure architecture in the cloud or in a data centre. Modern security processes are often just extensions of these fundamentals, with automation supercharging their utility. We are able to automate tasks that previously required manual intervention (such as data encryption), and leverage cloud providers who manage large portions of the technology stack for us.

Architecting secure infrastructure is everyone’s responsibility. For it to work effectively, your strategy needs to be supported by policies, procedures, and awareness. Cloud providers offer solutions that share this burden, but you must be aware of your role and exposure points. It doesn’t matter if you build a small one-instance app or a large microservice-based architecture; security planning should be introduced early and reviewed regularly, especially as the system evolves.

Monitoring and logging are key components, and ones well served by the marketplace. Methods that help you understand whether an incident has occurred, and what it was, can lead to ways of automatically remediating more minor issues. Regular tracking and auditing of permissions can alert you to unintentional misconfigurations or unexpected outcomes of other changes.

The risks that cyber-attacks pose to your organization are always evolving, but so too are the tools available to combat them. In the war for your data, cloud providers and the tools they produce are on the front line of defense. However, without planning and implementation, even the most modern security stack will just be a screen door in a hurricane.

Additional Resources

We highly recommend checking out the following resources:

- Cloud Security Alliance Guide

- The Canadian Chapter of the Cloud Security Alliance Guide

- Open Web Application Security Project (OWASP)

- Department of Defense Security Technical Implementation Guides

- AWS Architecture diagrams

- NIST’s Guide to General Server Security

These resources go into more depth and provide more thorough checklists.